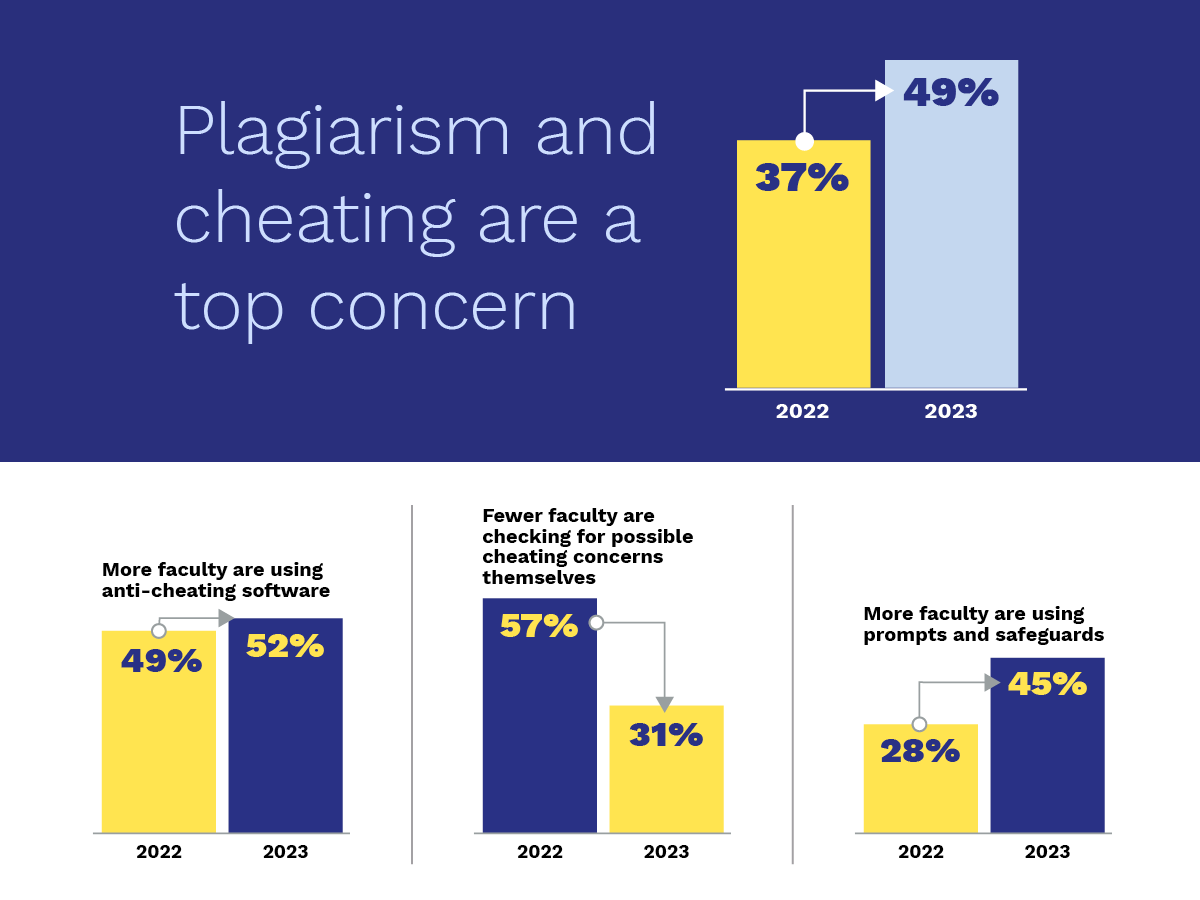

If you’ve worked in higher education for any length of time, you’re well aware that students’ needs and assumptions about higher education have changed in recent years. Our Faces of Faculty report this year supports that assertion. Dealing with changing student norms and expectations was the top challenge reported by the 1,024 faculty members we surveyed at 696 two- and four-year institutions nationwide. When it comes to student norms, cheating is the proverbial elephant in the classroom. And with ChatGPT coming into its own, it’s recently gotten bigger. In fact, 49% of our respondents cited plagiarism and cheating as a challenge, compared to just 37% a year ago. Incredibly, a recent survey of college students showed that some 22% have already used AI tools to complete assignments or even exams, highlighting the scale of the ethical dilemma facing faculty.

Approaches to dealing with the widespread issue of AI-enabled cheating range from hardline, zero-tolerance policies to something resembling bewildered resignation in the face of what may feel like an unstoppable technological force hitting classrooms. Yet there’s plenty of creative problem-solving happening in between.

How are faculty fighting AI plagiarism in 2023?

- More are using anti-cheating software (52% this year compared to 49% in 2022)

- Many more are using prompts and safeguards (45% this year vs. 28% in 2022)

- Far fewer say they are checking for possible cheating concerns themselves (31% this year vs. 57% in 2022)

- Some say they are randomizing questions and not allowing backtracking during timed exams

- Some are requiring drafts of papers and asking students questions about their papers to confirm the work is their own

- Others are staying ahead of the AI curve by testing and trying it themselves, or attending webinars on the topic to be sure they know how to use it

In their words

“Due to the creation of ChatGPT, the university is accepting that this type of cheating is going to happen and cannot be fully stopped due to the lack of available software for catching it. It is more or less based on an honor system now.” – Lecturer

“I have used ChatGPT extensively to try to get better at recognizing when students use it.” – Associate Professor

“ChatGPT has made [combating plagiarism] exceedingly difficult. Students submit computer generated work that is unoriginal and earns zero credit. I use [AI-checker websites]. In-class assignments are helpful as students must write something in class and cannot rely on a computer for ‘help.’” – Associate Professor

How are faculty responding?

Generative AI-enabled plagiarism and cheating are a hot-button issue, to say the least, and an understandable source of frustration for educators. One faculty member wrote in an opinion piece that “AI cheating is hopelessly, irreparably corrupting US higher education,” arguing that it threatens critical thinking and the entire learning process.

It’s hard to stay positive in the face of what may seem like an uphill battle to preserve the integrity of higher education. However, the scrappiness of higher education professionals is clear. Faculty members are getting ahead of the curve. They’re growing their own knowledge of AI – not just to fight cheating, but so they can strengthen and personalize their teaching and learning environments to help students.

The need for policy

Fewer than a third (31%) of students say their instructors, course materials, or school honor codes have explicitly prohibited the use of AI tools, and 60% say their instructors or schools haven’t specified how to use AI tools ethically or responsibly. Clearly written policies from an administration can help faculty draw clear lines for students about what is, and what isn’t, acceptable use, and demystify the boundaries of a complicated topic. Given the rise in cheating many faculty are experiencing this year alone, such policies seem like a must.

If faculty get thoughtful policies governing the use and parameters of AI on campus, as well as the support they need from their institutions to build their body of knowledge, they and their students will come out stronger on the other side.